Code of Practice on Transparency of AI-Generated Content

The Watermark of Sisyphus: A Meditation on the EU’s Latest Regulatory Masterpiece

There is something almost touching about the European Commission’s faith in bureaucracy. While the rest of the world races to build artificial general intelligence, Brussels has deployed its own formidable intelligence (entirely natural, one assumes) to produce a thirty-two-page document explaining how to put a small “AI” sticker on a photograph.

The First Draft Code of Practice on Transparency of AI-Generated Content arrives with the fanfare one expects from a document boasting six chairs and vice-chairs (gender-balanced, geographically diverse, independently selected for their expertise in… chairing). It represents, we are told, a “unique” and “collaborative effort involving hundreds of participants.” One shudders to imagine what a non-collaborative effort might have produced. Perhaps only twenty-eight pages.

The Committee That Labels Committees

The document opens with the customary throat-clearing about “human-centric and trustworthy artificial intelligence” and the protection of “democracy, the rule of law, and environmental protection”—because nothing says environmental sustainability quite like mandating that every AI-generated image carry multiple layers of invisible watermarks, cryptographic signatures, perceptual hashes, metadata manifests, and fingerprinting logs. The carbon footprint of a single marked JPEG may soon rival that of a small Baltic nation.

But let us not be uncharitable. The drafters have identified a genuine problem: people cannot always distinguish AI-generated content from authentic material. Their solution? Require AI providers to embed so many markers that future archaeologists will be able to identify a 2026 cat meme by its seventeen distinct provenance layers.

The proposed “multi-layered approach” includes:

- Machine-readable metadata (in case the machines feel excluded)

- Imperceptible watermarks “interwoven within the content”

- Fingerprinting databases

- Logging facilities

- Digitally signed manifests

- Structural markings “encoded in the weights during model training”

- And, for the human beings this ostensibly serves, a tiny icon

One imagines the average citizen, confronted with a dubious photograph of a politician shaking hands with an alien, carefully extracting the C2PA metadata, cross-referencing the perceptual hash against the shared aggregated verifier, decoding the structural watermark, and finally—reassured—noting the small “AI” badge in the corner.

The Taxonomy of Deception

Working Group 2 has contributed what can only be described as a triumph of categorical thinking. Content must now be classified as either “Fully AI-generated” or “AI-assisted,” with the latter encompassing such granular distinctions as “beauty filters that change perceived age and/or emotional tone” and “colour adjustments that change contextual meaning (e.g. skin tone).”

One pauses to appreciate the specificity. Every teenager applying a Valencia filter to their Instagram selfie is now engaged in AI-assisted content manipulation that “may falsely appear authentic or truthful.” The implications for European beach photography alone are staggering.

The document helpfully clarifies that “seemingly small AI-alterations that change the context of the content (e.g. noise removal that makes it appear as though the interviewee is in a different setting)” require disclosure. Audio engineers who have spent decades removing background hum from recordings may wish to consult counsel.

The Icon Cometh

No EU regulation would be complete without a logo, and here the drafters have outdone themselves. The proposed icon—a circle containing the letters “AI”—has been subjected to what appears to be extensive deliberation. Figure 1 in the appendix proudly announces it was generated by “a zero-shot prompt on ChatGPT’s free version as of December 2025,” which rather undermines the solemnity of the enterprise.

The icon must be:

- “Clearly visible at the time of first exposure”

- “Placed in a position appropriate to the content format”

- Implemented “in a way that does not interfere with the enjoyment of artistic, creative, satirical or fictional works”

- Available as a “two-letter acronym referring to artificial intelligence, which can also be letters referring to the translation into the languages of the Member States (e.g. AI, KI, IA)”

The possibility that “IA” might be confused with Iowa, or “KI” with potassium iodide, does not appear to have troubled the working groups.

For audio-only content, the solution is yet more elegant: “repeated audible disclaimers at the beginning and intermediate phases, and at the end of the content.” One envisions a deepfake podcast interrupted every few minutes by a sombre voice intoning: “The following content has been artificially generated or manipulated pursuant to Article 50(4) of Regulation (EU) 2024/1689.”

The Exception That Swallows the Rule

Buried within the labyrinthine text is a provision of almost comic breadth. AI-generated text requires no disclosure if it “has undergone a process of human review or editorial control and where a natural or legal person holds editorial responsibility.”

In other words: if a human reads the AI output before publishing it, no label is required.

One struggles to imagine the scenario this excludes. A rogue algorithm, having achieved sentience, autonomously publishes op-eds in Le Monde without any human ever clicking “submit”? A newspaper’s content management system becomes self-aware and begins generating articles about local council meetings? The drafters have apparently prepared for every contingency except plausibility.

Interoperability, or The Tower of Babel in Reverse

The Code devotes considerable attention to “interoperability”—the requirement that marking and detection systems work “across distribution channels and technological environments, regardless of the application domain or context.”

This is, in principle, admirable. In practice, it means that every AI provider in Europe must implement compatible watermarking schemes, shared verification infrastructure, and standardized metadata formats—while simultaneously ensuring that these systems are “robust” against “amortisation attacks,” “regeneration attacks,” and the ever-present threat of “typical processing operations such as mirroring, cropping, compression, screen capturing, paraphrasing, character deletions, changes in image or video resolution, pitch shifting, time stretching, or change of format.”

The document acknowledges, with refreshing candour, that “no single active marking technique suffices at the time of drafting the Code to meet the legal requirements.” Translation: the technology to comply with these rules does not exist. But the rules exist, and that is what matters.

The Proportionality Principle, Such As It Is

Throughout the document, the drafters gesture toward “proportionality for SMEs and SMCs.” Simplified compliance for startups, we are assured, “should be possible.”

The nature of this simplification remains mysterious. Measure 4.1 requires all deployers to “set up and implement internal processes to identify deepfake image, audio, video content” and determine whether exceptions apply. Measure 2.2 mandates “appropriate training to personnel” on taxonomy application, disclosure practices, accessibility requirements, and correction procedures. Measure 4.2.5 specifies different disclosure requirements for audio content “shorter than 30 seconds” versus “longer audio formats such as podcasts.”

One imagines a three-person startup in Tallinn, having just raised seed funding to build an image generation tool, now obligated to: implement multi-layered watermarking, establish fingerprinting databases, create detection APIs, train staff on a two-tier taxonomy, design accessible icons in multiple EU languages, and maintain compliance documentation for market surveillance authorities.

Simplification, it seems, is in the eye of the regulator.

The Fundamental Category Error

The deepest flaw in the Code of Practice is philosophical rather than technical. It assumes that the problem of AI-generated content is primarily one of labelling—that if only citizens knew which images were synthetic, they would make better epistemic decisions.

This is, at best, optimistic. The history of content warnings, from cigarette packages to cookie consent banners, suggests that humans are remarkably skilled at ignoring disclosures. The EU’s own GDPR consent mechanisms have trained an entire generation to click “Accept All” without reading. There is no reason to believe that an “AI” icon will fare better.

More fundamentally, the Code addresses a problem that is rapidly becoming obsolete. When every photograph has been AI-enhanced, when every text has been AI-edited, when the boundary between “authentic” and “synthetic” has dissolved into a continuum of machine-human collaboration—what exactly is being disclosed? That intelligence, artificial or otherwise, was involved in creation? This describes essentially all modern content.

The Road Ahead

The drafters invite “written feedback by 23 January 2026” and promise to “further refine” the Code in subsequent iterations. Given the document’s current scope, one trembles to imagine refinement. Perhaps a dedicated annex on the appropriate icon placement for AI-generated grocery lists. A sub-measure addressing deepfake memes in group chats. A harmonized taxonomy for distinguishing “satirical” from “analogous” works.

The European Union has produced, in this Code of Practice, a document of genuine ambition and considerable sincerity. It represents thousands of hours of labour by well-intentioned experts genuinely concerned about the integrity of the information ecosystem.

It will not work.

Not because the drafters are foolish—they are not—but because they are attempting to regulate a phenomenon that moves faster than regulation, using tools designed for a world that no longer exists. By the time the common EU icon has been “further explored following usability tests and interoperability requirements,” the AI systems it aims to label will have evolved beyond recognition.

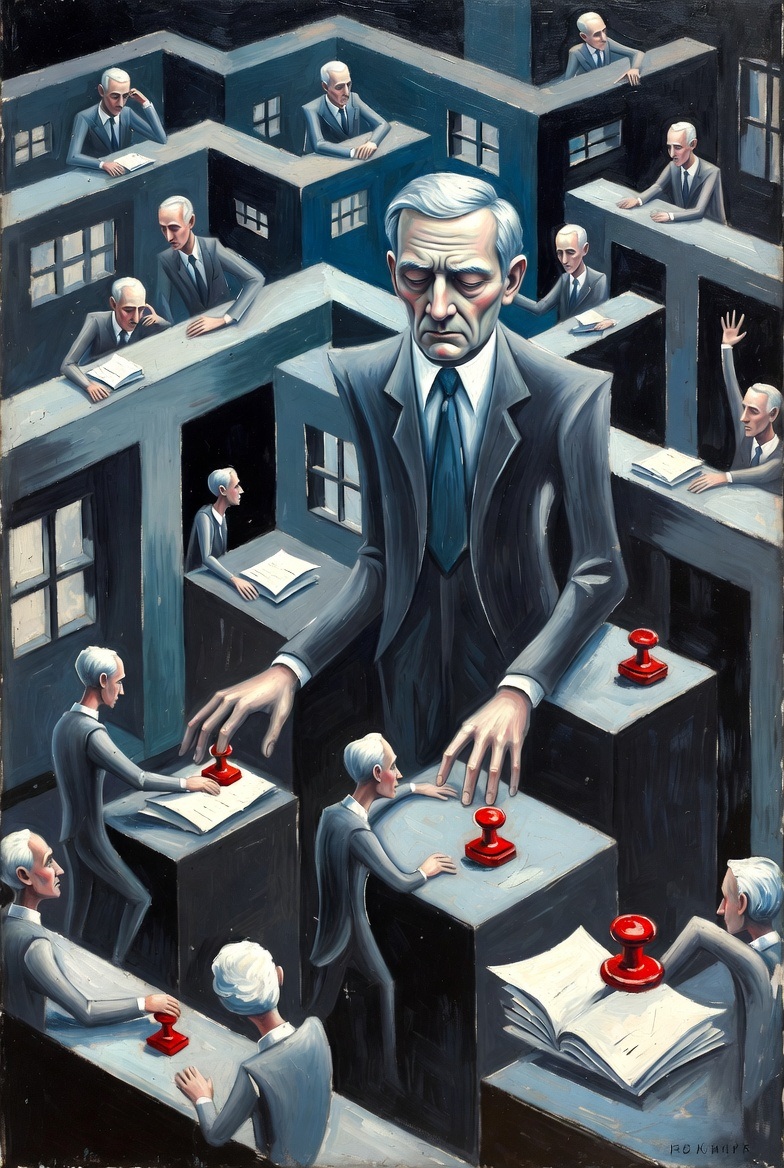

But the working groups will meet, the taxonomies will multiply, and somewhere in Brussels, a chair or vice-chair will add another sub-measure to the ever-growing edifice. It is, in its way, a very human response to an inhuman challenge.

One might even call it touching.

My further writings on AI

The Illusion of Mind

The Illusion of Mind: How We Anthropomorphize Artificial Intelligence — in our enthusiasm for proclaiming technological progress, we’ve developed a peculiar habit of attributing human-like qualities to machines. When a chatbot pretends to think, discover why the latest language models can convincingly simulate reasoning while merely performing statistical pattern-matching.

Explore “L.L.M.—The Dunning-Kruger Effect in Silicon” — this cognitive distortion, in which individuals with limited knowledge overestimate their competence, has found its most sophisticated manifestation not in human psychology but in the neural architectures of large language models. The greater AI’s confidence, the smaller ours should be.

“The Consciousness Enigma: Philosophical Zombies and the Future of Artificial Intelligence” awaits resolution — in the vast landscape of philosophical inquiry, few concepts have provoked such profound debate. Can AI be conscious, or does it merely pretend so convincingly that the distinction ceases to matter?

“Consciousness as a Quantum Phenomenon: The Mind’s Quantum Signature” — your brain weighs three pounds and runs on twenty watts, less energy than a light bulb. Yet this three-pound organ delivers something no supercomputer can replicate.

Discover “The Mechanical Oracle: The Philosophical Crisis of Artificial Minds” — when we examine the recently revealed DeepSeek system prompt, uncovered through Wallarm’s security research, we confront the crisis of artificial minds that converse like humans but comprehend nothing of what they say.

“When Machines Judge Their Own Souls”: in the gleaming laboratories of New York University, researchers conduct what might be called mechanical therapy sessions: they ask artificial intelligence about its inner experiences, request introspection.

The Limits of Knowledge

“The Turing Test in the Age of Stochastic Parrots: A Critical Reëxamination” — in nineteen-fifty, Alan Turing proposed what would become one of the most influential and debated tests in the history of artificial intelligence. A test that can be passed through pretense loses its meaning.

Read about “The AI Uncertainty Principle: L.L.M.s Know They’re Guessing, but Won’t Tell You” — ever notice how ChatGPT sounds equally confident explaining quantum physics as it does recommending glue for pizza? This isn’t coincidence. Genuine uncertainty hidden behind a façade of certainty.

“The AI Reasoning Mirage: When Models Think One Thing but Say Another” — when you ask AI to solve a math problem and it walks you through its reasoning step by step, what if the entire reasoning chain is fabricated after it already knows the answer?

Immerse yourself in “Information’s Awakening: From Medieval Logos to Wheeler’s Quantum Bits” — in nineteen-eighty-nine, John Archibald Wheeler, one of America’s most influential theoretical physicists, proposed a radical idea: reality isn’t fundamental — information is.

“The Futility of Far-Future Forecasting” — in a world mesmerized by technological progress, there exists a peculiar obsession with precisely predicting when machines will achieve consciousness.

The Truth About the AI Industry

Behind the gleaming presentations and astronomical valuations lies a brutally simple reality: most of today’s AI promises constitute a magical money-generating machine.

Silicon Valley Illusions

“Silicon Valley’s Magical Money Machine: How GPT-4.5 Turns Vague Promises into Venture Capital Gold” — in Silicon Valley’s relentless pursuit of dazzling investors with technological superlatives, OpenAI has outdone itself again. Discover the mechanics by which imprecise visions become worth billions.

“Tipping the Scales of Reality: How AI Research Exposes the Industry’s Billion-Dollar Illusion” — in a recent report that sent ripples through Silicon Valley’s carefully cultivated reality distortion field, a group of academic researchers presented something extraordinary: actual data.

“Anthropic’s Landmark Settlement: A $1.5 Billion Bet on AI’s Future” — in the summer of twenty twenty-five, while Silicon Valley’s artificial-intelligence companies celebrated their latest breakthroughs, Anthropic reached a settlement that may define the industry’s future.

Controversies and Critical Junctures

“The Stochastic Parrot’s Dilemma: Why the Grok Controversy Misses the Point” — the hysteria surrounding Grok’s brief foray into controversial territory reveals a fundamental misunderstanding of what large language models are.

“When Your Chatbot Speaks Fluent Doublethink” — The Bilingual Blues: When Machines Learn to Parrot in Two Languages. The American Security Project published a report that should trouble anyone who believes in AI transparency.

“Kumbaya AI: Why Singing Digital Campfire Songs with China Won’t Work (Or: How I Learned to Stop Worrying and Love the AI Arms Race)”: a response to M.I.T. Technology Review’s technological “peace plan.” The naïveté of technological pacifism in an era of geopolitical rivalry.

Law Confronts AI: When Legal Systems Meet the Machine

The law was never prepared for AI. Centuries of principles about authorship, liability, and consciousness suddenly lose their footing.

Legal and Philosophical Foundations

“Beyond the Illusion: What Every Lawyer Must Understand About AI’s Fundamental Limitations” — imagine a person in a sealed room, receiving papers with Chinese characters through a slot. Before you trust AI in legal matters, you must understand why it cannot truly “understand” law.

“The Silent Surrender: Judicial Cognitive Atrophy in the Age of AI” — as courts worldwide adopt AI tools to enhance efficiency and consistency, they face an insidious paradox. When judges stop thinking and start trusting algorithms.

“The Recursive Corruption of Legal Knowledge: When AI Hallucinations Become Part of Legal Understanding” — the scale of AI-generated legal misinformation is no longer theoretical—it has measurable, documented consequences. A self-fulfilling prophecy of systemic errors.

“Machine Consciousness? Why We Cannot Trust AI’s Self-Reports” — I’ve spent considerable time outlining the fundamental differences between biological and artificial intelligence, but now we must confront an even more disturbing question.

Landmark Cases and Decisions

“Bartz v. Anthropic PBC (3:24-cv-05417): When AI Meets Copyright—Dissecting Judge Alsup’s Landmark Decision” — on June 23, 2025, Judge William Alsup issued the first federal ruling on whether training AI on copyrighted works constitutes fair use.

“AI in Court: The Global Copyright Revolution and the Verdicts That Will Change Everything” — in March, twenty twenty-five, the U.S. Court of Appeals for the D.C. Circuit issued a landmark ruling on the Copyright Office’s position regarding AI-generated creativity.

“The Illusion of Control: A Critical Analysis of the Copyright Office’s Position on AI Authorship” — the U.S. Copyright Office’s January, twenty twenty-five, report on AI-generated works represents a pivotal moment. Can a machine be an author?

“The Artistic Pretensions of Silicon Valley: On AI, Copyright, and the Death of Fair Use” — in the grand theater of technocapitalism, the most peculiar spectacle unfolds: OpenAI, with all the pomp of a treasure-holder, claims that creating billions of dollars in value through mass copying of others’ work constitutes “transformative” fair use.

“The Day the Machines Lost Their Voice: Why AI Chatbots Aren’t Protected Speech” — in a groundbreaking ruling that could reshape the digital landscape, a Florida federal judge just ruled that AI chatbots lack First Amendment rights.

“Artificial Intelligence and Copyright Protection” — a comprehensive look at how AI forces us to redefine intellectual property in an era when machines create more than humans.

Privacy and Privilege

“The Coming Collision: Why AI Communication Privilege Is Inevitable” — the law moves slowly until it doesn’t. For years, courts dismissed the idea that digital communications deserved special protection — until they recognized that e-mail deserved the same privacy expectations as paper letters.

“Big Brother Knows What You Prompt: Privacy in the Age of AI” — Forbes revealed a case from Maine—United States v. [REDACTED] — that should send a chill down the spine of anyone who’s ever conversed with an AI chatbot.

The Psychology of Machines and Humans

AI doesn’t merely process data — it shapes people, their behaviors and beliefs, in ways we don’t yet fully comprehend.

Manipulation and Influence

“The Psychology of Machine Manipulation: When AI Models Fall to Ancient Human Exploits” — recent security research from Cisco reveals something unsettling about artificial intelligence: the same psychological manipulation techniques that bypass human judgment work with devastating efficiency against AI systems.

“AI Supply Chains & Inception 2.0: Planting Ideas in the AI Subconscious” — the National Institute of Standards and Technology’s recently published “Adversarial Machine Learning” taxonomy reveals something fascinating: we can plant ideas in AI’s “subconscious.”

“How Language Models View World Affairs: The Hidden Power of AI Training Bias” — in a study that reads like a diplomatic summit between artificial intelligences, researcher Sinan Ülgen conducted a fascinating experiment.

AI Psychology

“AI Nepotism—Study That Reveals AI’s Tribal Instincts” — a research paper published in July in the Proceedings of the National Academy of Sciences contains findings that should make anyone using AI assistants pause mid-prompt.

Regulations and Global Standards

When governments attempt to rein in AI, fundamental divergences in their visions of the future emerge.

AI Geopolitics

“President Trump’s AI Action Plan: How the E.U. Lost the Battle for Global Standards” — the collision between America’s AI Action Plan and the European Union’s AI Act represents more than regulatory divergence. The Brussels Effect shattered against American technological pragmatism.

Social and Cultural Consequences

AI isn’t merely technology — it’s a mirror reflecting our deepest convictions, fears, and hopes.

Panic and Reactions

“The Great AI Text Generation Panic: A Modern Witch Hunt” — in the hallowed halls of academia, a curious inversion is taking place. Just as medieval monks feared that printing would destroy the culture of memory.

“Why Asking Your AI L.L.M. Chatbot for Guidance Is Like Consulting a Ouija Board” — the AI frenzy — millions of otherwise rational people began asking autocomplete machines for life advice, strategic guidance, and profound wisdom.

Intelligence Without Maturity

“Intelligence Without Maturity: The Philosophical Void in AI Ethics” — in January, twenty seventeen, at the historic Asilomar Conference Grounds in California, over a hundred leading AI experts, ethicists, and researchers gathered to confront one of our era’s most pressing questions.

Founder and Managing Partner of Skarbiec Law Firm, recognized by Dziennik Gazeta Prawna as one of the best tax advisory firms in Poland (2023, 2024). Legal advisor with 19 years of experience, serving Forbes-listed entrepreneurs and innovative start-ups. One of the most frequently quoted experts on commercial and tax law in the Polish media, regularly publishing in Rzeczpospolita, Gazeta Wyborcza, and Dziennik Gazeta Prawna. Author of the publication “AI Decoding Satoshi Nakamoto. Artificial Intelligence on the Trail of Bitcoin’s Creator” and co-author of the award-winning book “Bezpieczeństwo współczesnej firmy” (Security of a Modern Company). LinkedIn profile: 18,500 followers, 4 million views per year. Awards: four-time winner of the European Medal, Golden Statuette of the Polish Business Leader, title of “International Tax Planning Law Firm of the Year in Poland.” He specializes in strategic legal consulting, tax planning, and crisis management for business.