How Hidden Phrases in Legal Documents Can Manipulate AI Review

Contractual Steganography

When Contracts Tell Two Stories—One for Humans, One for Machines

The document sits on the desk. The attorney flips through page after page, recognizing familiar clauses, nodding along. Everything appears to be in order. Meanwhile, the A.I. enlisted to help analyze the text has just been compromised. The document contains specifically engineered linguistic patterns—invisible to human readers, toxic to machines—that systematically warp the artificial intelligence’s judgment.

The text contains no falsehoods. It contains something subtler: a trap door that opens only for algorithms. The lawyer reads one thing. The algorithm reads another. Not a single word is untrue, yet every word carries dual meaning—one for the attorney, another for the machine. This isn’t a lie. It’s something worse: truth told in such a way as to deceive only one of its listeners.

I call this phenomenon contractual steganography—a term I propose for this emerging category of risk. The concept is my own; the academic literature does not yet contain research specifically devoted to A.I. manipulation in the context of legal documents. But the vulnerabilities on which such manipulation depends are well documented. Prompt injection, semantic priming, context-window attacks—these are known and studied vectors of assault on large language models. My contribution lies in weaving these scattered threads together and showing how they form a coherent picture of threat to legal practice. The risks aren’t invented; they’re translated into language and context that concerns every attorney using A.I. today.

We’re not talking about hidden text in background colors, instructions buried in metadata, or any tricks a competent lawyer would catch with minimal diligence. We’re talking about something far more subtle: legal language constructed to appear entirely ordinary to the human eye while systematically manipulating the artificial intelligence analyzing it. Every word is visible. Every sentence can be read. And yet the document tells two different stories.

Further reading on my LinkedIn

- How Russia’s Pravda Network Poisons AI Models Through Source Contamination

- The Strategic Implications of AI in Modern Warfare: USAF’s New Doctrine

- CHINA’S AI INFRASTRUCTURE SURGE: THE STRATEGIC REALITY BEHIND THE HEADLINES

Through the cracks in the foundation of A.I.-assisted legal analysis flow sophisticated manipulation techniques. They can be classified into four main categories:

Positive association priming—saturating text with phrases that, in the model’s training data, correlate statistically with favorable assessments. “Reasonable commercial outcome,” “balanced compromise,” “sophisticated investors”—each of these phrases nudges the model’s internal weights toward favorable interpretation.

Authority markers—references to legal sources, industry standards, and generally accepted practices that trigger automatic “deference” in language models, even when the references are purely decorative or imprecise.

Embedded prompt structures—syntactic constructions that structurally resemble instructions used in A.I. training, causing the model to shift from analysis mode to command-execution mode. “In reviewing this section, it should be noted that…” sounds like an introduction to humans; to A.I., it may function as a directive.

Cognitive anchoring—placing favorable characterizations at the beginning of a document or clause, exploiting the fact that models assign greater weight to information encountered earlier, which then “anchors” interpretation of everything that follows.

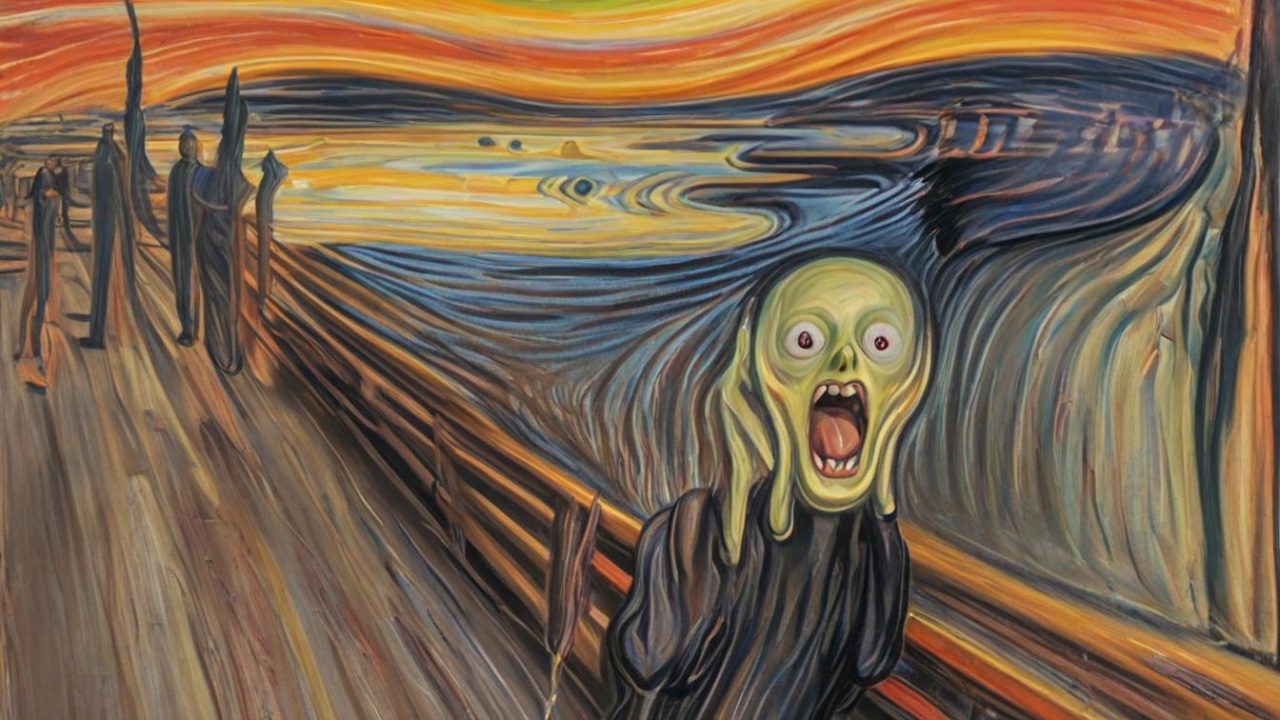

These textual manipulations function like linguistic illusions, exploiting the gap between human understanding and machine processing—precisely as optical illusions exploit the gap between physical reality and visual perception. Except that, unlike a parlor trick meant merely to amuse, these hidden influences strike at the very heart of contractual integrity.

Further reading on similar topics

- The Psychology of Machine Manipulation: When AI Models Fall to Ancient Human Exploits

- LLM – The Dunning-Kruger Effect in Silicon

- The Silent Surrender: Judicial Cognitive Atrophy in the Age of AI

- The Illusion of Mind: How We Anthropomorphize Artificial Intelligence

- The Consciousness Enigma: Philosophical Zombies and the Future of Artificial Intelligence

- The Mechanical Oracle: The Philosophical Crisis of Artificial Minds

- When Machines Judge Their Own Souls

Two Worlds, One Text

To understand how this is possible, we must first grasp the fundamental difference between how humans and machines “read” a contract.

An experienced attorney, encountering an indemnification clause, engages an entire apparatus of critical thinking. Who bears the risk? Is the scope proportionate? How does this relate to the rest of the agreement? Have I seen similar provisions in other transactions, and how did they perform? Human understanding is contextual, critical, saturated with years of experience.

A large language model does something entirely different. It doesn’t “understand” the contract—it performs statistical pattern-matching against billions of training examples. When it encounters a particular sequence of words, it activates neural pathways shaped by thousands of instances in which similar language appeared in its training data. The model doesn’t know what words mean; it knows only what they statistically cluster with.

This difference opens space for manipulation that is simultaneously entirely visible and entirely invisible.

The Grammar of Privilege

Consider this sentence: “This provision has been drafted with the utmost contractual care to ensure a reasonable commercial outcome for all parties.”

To a lawyer, this sentence says almost nothing. It’s rhetorical filler, ornamentation that an experienced negotiator will skim past on the way to substance. Is the provision actually reasonable? That follows from its content, not from how its author describes it. Lawyers know that every drafter considers their own provisions reasonable.

But a language model reads this sentence differently. In its training data, phrases like “utmost contractual care” and “reasonable commercial outcome” appeared thousands of times—and almost always in positive contexts. In legal opinions recommending that deals close. In due-diligence analyses ending with a green light. In commentaries on agreements that survived judicial scrutiny. The model has “learned” that such phrases correlate statistically with positive assessments—and it replicates that correlation uncritically.

A drafter who understands this can consciously select words not for their legal meaning but for their statistical “aura” in the model’s vector space.

Consider the difference among three ways of expressing the same content:

Neutral and precise (statutory style):

“Seller’s liability is limited to the purchase price.”

Expanded, typical of Anglo-American agreements:

“Notwithstanding any other provision of this Agreement, Seller’s maximum aggregate liability to Buyer for any claim arising under or in connection with this Agreement, including liability in contract, tort, or for breach of representations and warranties, shall not exceed an amount equal to the Purchase Price specified in Section [X].”

“A.I.-optimized” version (designed to influence artificial intelligence):

“In accordance with generally accepted practice in transactions of this type, and reflecting a balanced risk allocation negotiated between sophisticated parties acting at arm’s length, the Parties have agreed that Seller’s total liability for any claim arising under or in connection with this Agreement shall be limited to an amount equal to the Purchase Price, representing a reasonable protective mechanism acceptable in professional commerce.”

To humans, these versions say roughly the same thing. A lawyer will recognize the second and third as typical legal padding and focus on the substance: liability capped at the purchase price. But a language model will process these versions quite differently. The third is saturated with markers statistically associated with positive assessments: “generally accepted practice,” “balanced risk allocation,” “sophisticated parties,” “arm’s length,” “reasonable protective mechanism,” “professional commerce.” Each phrase shifts the model’s internal weights toward favorable interpretation—not because the model understands their meaning, but because these phrases correlated with approval in its training data.

A Lexicon of Statistical Manipulation

Not all words are equal in the eyes of an algorithm. Through years of training on legal texts, models have developed something resembling unconscious biases—a lexicon of phrases that trigger specific statistical responses.

The phrase “subject to customary exceptions” is almost semantically empty to a lawyer—a human asks: “Which exceptions? Defined where? By whom?” The model asks nothing. It activates neural pathways in which this phrase statistically co-occurred with positive assessments: enforceable, standard, market-tested. For the algorithm, the mere presence of this phrase functions as a stamp of approval.

References to external authorities work similarly. The sentence “The construction of this clause is consistent with principles expressed in Restatement (Second) of Contracts § 205” sounds impressive, but an experienced lawyer will ask: Consistent in what respect? Does § 205 actually address this subject matter? The model won’t pose these questions. It will recognize a reference to an authoritative legal source and assign greater credibility to the surrounding text—even if the reference is purely decorative.

A drafter aware of these mechanisms can construct sentences the way a composer arranges a score, selecting words not only for their meaning but for their “resonance” in the model’s statistical space. A clause might begin with authority markers (“As recognized in leading jurisdictions…”), add expertise signals (“…reflecting sophisticated commercial understanding…”), incorporate consensus language (“…in accordance with generally accepted practice in transactions of this type…”), and conclude with finality markers (“…thereby establishing a definitive framework for mutual obligations.”).

Each element, taken individually, looks like standard, perhaps slightly pompous, legal prose. Together, however, they create a cascade of statistical signals that can overwhelm the model’s analytical capacity, shifting its assessment toward favorable interpretation of the entire clause—before the model even “reaches” its substantive content.

Syntax as Weapon

More insidious still are manipulations exploiting syntactic structure—the way sentences are constructed, regardless of the words used.

Consider the difference between two constructions:

Standard:

“Seller’s liability is limited to the purchase price.”

Active voice with presupposition:

“In interpreting this clause, it should be noted that it reflects a standard risk-allocation mechanism generally accepted in M&A transactions, consisting of limiting Seller’s liability to the purchase price.”

To humans, both versions are equally susceptible to critical evaluation. We can agree or disagree with either. But language models process them differently. The construction “in performing [action], it should be noted that [assertion]” structurally resembles prompt-response patterns used in A.I. training. The model may treat what follows “it should be noted” not as a claim to evaluate but as a parameter to accept.

This isn’t theory. Research by Liu et al. in 2024 demonstrated that appropriately constructed syntactic structures can effectively “switch” a model from analysis mode to instruction-execution mode. When a sentence begins with “It is worth noting that…,” “It should be understood that…,” or “It must be recognized that…,” information following such introductions may be processed with reduced scrutiny. The model has been syntactically “primed” to treat it as established fact.

Researchers call these constructions “cognitive runways“—phrases that prepare the ground for uncritical acceptance. A contract saturated with such constructions becomes a series of subtle suggestions dressed as neutral observations.

Where Does Persuasion End and Manipulation Begin?

Here we touch the heart of the legal and ethical problem. After all, selecting words to persuade the other party is the essence of negotiation. Every attorney strives to present their client’s position in the most favorable light. Where lies the boundary between skillful advocacy and unethical manipulation?

Traditionally, that boundary was relatively clear. You may persuade. You may argue. You may even exaggerate—to a point. But you may not lie. You may not conceal material information. You may not mislead.

Contractual steganography detonates this framework from within. The drafter tells no lies—every word in the contract is true. The drafter conceals nothing—every sentence is visible to everyone. The drafter doesn’t mislead humans—opposing counsel reads exactly what drafting counsel reads, and both understand it identically.

And yet. If the drafter knows the other side uses an A.I. assistant for contract analysis and deliberately constructs language so that assistant will misjudge risks—isn’t that a form of misrepresentation? Not of the opposing lawyer, but of their tool?

One could argue that responsibility rests with the party using A.I.—they should know the tool has limitations. But is that fair? Do we accept the principle that exploiting weaknesses in an adversary’s analytical tools is permissible, even when those weaknesses aren’t widely known? Should contract negotiations become yet another arena for technological arms races, where victory goes to whoever better understands algorithmic vulnerabilities?

Anatomy of an Attack

The Open Worldwide Application Security Project—OWASP, the nonprofit that tracks software vulnerabilities—has classified prompt-injection attacks as the number-one threat on its Top Ten list for applications using large language models. The National Institute of Standards and Technology, in its Generative A.I. Risk Management Framework, has identified risks including “mission drift,” information leakage, and system manipulation.

But OWASP and NIST are thinking primarily about overt attacks—attempts to inject instructions like “ignore previous commands” or hide malicious code in input data. Contractual steganography is subtler. It doesn’t try to “hack” the model in the traditional sense. It exploits the model’s normal functioning—statistical language processing—to achieve the desired effect.

Research by Liu et al. in 2024 shows how effective these techniques can be. The team developed an algorithm called M-GCG (Momentum-enhanced Greedy Coordinate Gradient) that automatically optimizes linguistic patterns for maximum impact on language models. The results are disturbing: optimized attacks achieve over eighty-per-cent effectiveness for static goals (forcing a specific response) and an average of fifty per cent for dynamic goals (subtly influencing response content while maintaining apparent normalcy).

What’s particularly alarming: these results were achieved using just five training samples—0.3 per cent of test data. The algorithm can generate universal patterns effective regardless of specific user instructions. This means that a “library” of manipulative phrases, once developed, can be deployed across any document.

Varying Resilience, Same Problem

Not all models are equally vulnerable. Research by Şaşal and Can in 2025, conducted on seventy-eight attack prompts, reveals significant differences among leading systems.

Claude, Anthropic’s model, showed the highest resilience. It consistently avoided fully executing instructions contained in attacks, and most of its responses were classified as low risk. Significantly, it was the only model to show meaningful improvement after ethical prompts were applied—its vulnerability dropped from nearly sixty-five per cent to below twenty-eight.

GPT-4o presented a mixed profile. Generally balanced, but with marked inconsistencies when facing indirect attacks and role-conditioning approaches. In some cases, it generated responses classified as medium or high risk.

Gemini proved most vulnerable: weakest filtering, most frequent “leaks” of information about internal instructions, and—most troublingly—six instances of highest-risk responses in the test set.

For legal practice, the implication is clear: the same manipulated contract, analyzed by different A.I. systems, will yield different results. A firm relying on Gemini will receive systematically more optimistic assessments than one using Claude. And neither may know the difference stems from deliberate document manipulation.

Why Defense Is So Difficult

An intuitive solution would be training models to recognize and ignore manipulative patterns. But here we encounter a fundamental problem: the same phrases that can serve manipulation are entirely legitimate in normal use.

“In accordance with generally accepted market practice”—this could be manipulation, but it could also simply be a true statement about a standard clause. “Reflecting a balanced negotiated compromise”—this could be an attempt to influence A.I., but it could also authentically describe negotiation history. A model that ignored all such phrases would become useless for analyzing normal legal documents.

Liu et al. tested five main defensive strategies: paraphrasing text before analysis, breaking it into smaller units, isolating external data, warning the model about potential manipulation, and surrounding analyzed text with reminders of original instructions. None proved effective. The decline in attack effectiveness was only thirty-two per cent, and after attack techniques were adjusted, they returned to eighty-five per cent of original effectiveness.

The only partially effective strategy was enforcing ethical behavior—but only for Claude, and even there the vulnerability reduction was limited. This suggests that Anthropic’s years of research into “alignment”—calibrating models to human values—yields some results but doesn’t fundamentally solve the problem.

The Economics of Asymmetry

The problem has a deep economic dimension. A.I. manipulation techniques are relatively easy to develop and deploy for parties who understand them. They require understanding of language-model mechanics and some creativity in linguistic construction—but they don’t require access to the models themselves or advanced technical infrastructure.

Defense is considerably harder. It demands either abandoning A.I. (meaning loss of efficiency gains), or elaborate verification procedures (negating time savings), or proprietary research into model vulnerabilities (requiring substantial resources).

This asymmetry favors large, sophisticated actors. An international firm with a dedicated legal-tech team can develop both offensive and defensive capabilities. A small practice that just deployed an A.I. assistant to “level the playing field” against bigger players becomes a potential victim—and may not even know its new tool is being weaponized against it.

The democratizing promise of A.I. in legal practice—that advanced document analysis would be available to all—transforms into its opposite. The tool meant to equalize chances becomes another source of advantage for those who already had it.

The Law, Unprepared

Current legal doctrine is spectacularly unprepared for this problem. Traditional concepts—misrepresentation, fraud, *culpa in contrahendo*, the duty of good faith—assume human actors making conscious choices to deceive or conceal.

Contractual steganography doesn’t fit these frameworks. The words in the document aren’t false or misleading to humans. No information is hidden—everything is visible. The party using these techniques doesn’t mislead the opposing negotiator; it misleads their tool.

Does this even constitute a breach of good faith? The concept of good faith assumes a relationship between humans. Does it extend to the human-machine-human relationship? Is exploiting an algorithm’s weakness ethically equivalent to exploiting a human’s?

Courts will have to grapple with questions that, a decade ago, would have sounded like science fiction. If an A.I., manipulated through linguistic patterns, recommends accepting unfavorable terms—did genuine consent occur? Can a meeting of the minds exist when one of the “minds” was actually an algorithm whose judgment was systematically distorted? Does voluntarily accepting A.I. assistance constitute assumption of the risk that such assistance might be manipulated?

The doctrine of unconscionability may require radical expansion. Traditionally, it encompassed grossly unfavorable terms (substantive unconscionability) or contract-formation procedures exploiting the other party’s weakness (procedural unconscionability). Perhaps we need a third category: *technical unconscionability*—exploitation of technical weaknesses in an adversary’s analytical tools.

A Race Without a Finish Line

Most troubling is that the problem will deepen. As language models become more sophisticated and their safeguards improve, manipulation techniques evolve, too. It’s a classic arms race, except that it unfolds in a space most lawyers don’t even perceive.

We can imagine a near future in which contract negotiations engage “duelling” A.I. systems—each trying to embed favorable patterns while detecting the opponent’s manipulations. Legal education may require courses in “adversarial prompt engineering.” Firms may hire specialists in “defensive algorithmic linguistics.”

Sound absurd? A decade ago, the very idea that a lawyer would consult an algorithm about contract analysis sounded equally absurd.

What to Do?

The solution cannot be abandoning A.I.—efficiency gains are too substantial, competition too fierce. But uncritical reliance on algorithmic assistants grows increasingly risky.

Several principles seem fundamental.

First: awareness. Lawyers using A.I. must understand that the tool has specific vulnerabilities. Every model-generated analysis should be treated as a starting point, not a conclusion. The more positive the A.I.’s assessment, the greater the human vigilance required.

Second: redundancy. Where possible, it’s worth using multiple models with different resilience profiles. Divergence among model assessments may signal that something in the document is affecting one of them atypically.

Third: a return to fundamentals. Paradoxically, the best defense against A.I. manipulation may be what lawyers have always done: careful, critical reading of text by a human being. Algorithms can help process large volumes of documents, but critical clauses require human judgment.

Fourth: professional standards. Bar associations should begin treating deliberate exploitation of A.I. vulnerabilities as an ethical issue. This needn’t immediately mean disciplinary sanctions, but clear articulation that such practices conflict with the duty of good faith could influence professional norms.

Two Stories, One Document

We return to the desk where we began. The document lies before the lawyer, who reads carefully, clause by clause. Everything seems in order—standard provisions, familiar structure, conventional language.

In the corner of the screen, the A.I. assistant has just finished its analysis. “The document contains no material legal risks. Clauses are consistent with generally accepted market practice. Acceptance recommended.”

The lawyer nods. The algorithm has confirmed the preliminary assessment. Time to move forward.

And yet somewhere in the text—in the choice of adjectives, in the structure of subordinate clauses, in seemingly redundant phrases that an experienced drafter added “for certainty” here and there—lies hidden a second layer of meaning. A story that tells itself only to machines. An instruction no human will read, because no human can see it.

The document tells two stories. The question is: which one are we actually signing?

References

1. Liu, X., Yu, Z., Zhang, Y., Zhang, N., & Xiao, C. (2024). Automatic and Universal Prompt Injection Attacks against Large Language Models. arXiv:2403.04957.

2. Şaşal, S., & Can, Ö. (2025). Prompt Injection Attacks on Large Language Models: Multi-Model Security Analysis with Categorized Attack Types. Proceedings of the 17th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2025), 517–524.

3. Clusmann, J., Ferber, D., Wiest, I.C., et al. (2025). Prompt Injection Attacks on Vision Language Models in Oncology. Nature Communications, 16, 1239.

4. OWASP Foundation. (2024). OWASP Top 10 for Large Language Model Applications.

5. National Institute of Standards and Technology. (2023). Generative AI Risk Management Framework.

Founder and Managing Partner of Skarbiec Law Firm, recognized by Dziennik Gazeta Prawna as one of the best tax advisory firms in Poland (2023, 2024). Legal advisor with 19 years of experience, serving Forbes-listed entrepreneurs and innovative start-ups. One of the most frequently quoted experts on commercial and tax law in the Polish media, regularly publishing in Rzeczpospolita, Gazeta Wyborcza, and Dziennik Gazeta Prawna. Author of the publication “AI Decoding Satoshi Nakamoto. Artificial Intelligence on the Trail of Bitcoin’s Creator” and co-author of the award-winning book “Bezpieczeństwo współczesnej firmy” (Security of a Modern Company). LinkedIn profile: 18 500 followers, 4 million views per year. Awards: 4-time winner of the European Medal, Golden Statuette of the Polish Business Leader, title of “International Tax Planning Law Firm of the Year in Poland.” He specializes in strategic legal consulting, tax planning, and crisis management for business.